Models have figured heavily in government responses to the coronavirus. This has given us the opportunity for a real-time lesson in the uses of models. In the process, we’ve learned some important lessons in how to best make use of models — and equally importantly, in how not to use them.

That’s directly relevant to environmental regulation. We rely on models in environmental law for many purposes: predicting how carbon emissions will impact climate, determining where air pollution will travel, projecting how the electricity system will respond to an increase in renewable energy, determining how an endangered species will respond to habitat changes, and anticipating how overall emissions will be affected by fuel efficiency standards for new cars. The most sophisticated of these models, and the most politically controversial, are large-scale models of climate. Whether the models are sophisticated or crude, they all pose the question of how policymakers should make appropriate use of them.

Recent experience with the coronavirus drives home some important lessons about using models. Policymakers have needed the models to forecast the need for hospital space and medical supplies, and to project the effects of tightening or relaxing social distancing. It’s easy for policymakers, and for us as members of the public, to go wrong in considering models. Here are six important things to remember:

- Don’t rely on a single model. Every model oversimplifies reality in the hope of making it manageable, just as a map is different from a detailed aerial photograph. Different models make different simplifying assumptions, and each captures some but not all of the reality of the situation. A model that is good for making short-term predictions about hospitalizations may not be good at long-term predictions about epidemic numbers, and vice versa.

- “Confidence levels” don’t really measure confidence. We often see statements that a model gives a 95% likelihood that some future event (maybe the number of coronavirus deaths) will be within a certain range. That means that, if the model and the input data are both valid, there is only a 5% chance that the future event will be outside that range. The modelers themselves are perfectly aware that the data may be flawed and that their model may not be correct. You should be, too.

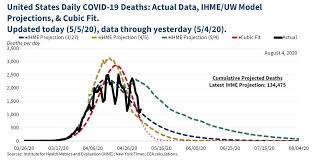

- Don’t home brew your own. Models have their limitations, but don’t think you can do better on your own out of pure raw intelligence. You might be wrong about your intelligence, but even if you aren’t, things will probably end badly. Think of law professor Richard Epstein’s prediction of 5,000 U.S. deaths, and 50,000 worldwide. Or economist Kevin Hassett’s more recent cubic model (shown in the picture accompanying this post) predicting that the disease would vanish by mid-May.

- Trust the models, not your gut. Your gut is great at digesting proteins but not so good at math. Case in point: “when you have 15 people, and the 15 within a couple of days is going to be down to close to zero, that’s a pretty good job we’ve done.” The models may go wrong, but at least they’re based on data. Recently, models of coronavirus spread with very different structures have started converging in their predictions, which should at least somewhat increase our confidence in their forecasts.

- Beware of perfection. The perfect model is an aspiration, not a reality, at least outside of theoretical physics. Corollary: if a model’s correspondence with the facts looks too good to be true, it probably is. It may be an exercise in curve fitting, like Hassett’s cubic model, meaning it’s simply designed to fit past data and says little about the future. (You see this sometimes with people who know some statistics and are just trying to regress global temperatures against something else.) Or else the data is being massaged to fit the model. (Climate skeptics are often guilty of this.) Neither one is good.

- Don’t cherry pick. The Trump Administration has constantly favored the most optimistic model it can find, often the IHME model. Others might favor the most pessimistic model out of a “better safe than sorry” mentality. Neither approach gives you an accurate perspective. It’s so, so tempting to ignore the models whose outcome just seems “wrong,” such as the climate models that seem too optimistic or the disease models that say the epidemic will stop sooner than we expected. But it’s a mistake.
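To make the curve-fitting warning above concrete, here is a minimal Python sketch. It is not Hassett’s model or anyone else’s: the weekly counts are invented for illustration, and the helper name `cubic_through` is hypothetical. It simply forces a cubic through four past data points and then asks that same curve about the future.

```python
from fractions import Fraction


def cubic_through(points):
    """Return a function that evaluates the unique cubic passing through
    four (x, y) points, via Lagrange interpolation in exact arithmetic."""
    if len(points) != 4:
        raise ValueError("need exactly four points")

    def predict(x):
        total = Fraction(0)
        for i, (xi, yi) in enumerate(points):
            term = Fraction(yi)
            for j, (xj, _) in enumerate(points):
                if j != i:
                    term *= Fraction(x - xj, xi - xj)
            total += term
        return total

    return predict


# Invented weekly counts (week, count). These are NOT real COVID-19 data.
past = [(0, 10), (1, 40), (2, 60), (3, 50)]
curve = cubic_through(past)

# The fit to the past is perfect by construction: every error is zero.
in_sample_errors = [curve(week) - count for week, count in past]
print("errors on past data:", [int(e) for e in in_sample_errors])

# The same curve, asked about weeks it has never seen.
for week in (4, 5, 6):
    print("week", week, "forecast:", int(curve(week)))
```

The curve matches every past point exactly, yet it forecasts a negative count by week 4 and falls further after that. A flawless fit to past data tells you nothing about whether the forecast makes sense.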

We’ve seen a lot of mistakes in the use of models, many of them from the Administration. Many of them are easy mistakes to fall into even if you know better. Be careful.

The post Using and Abusing Models: Lessons from COVID-19 appeared first on Legal Planet.

By: Dan Farber

Title: Using and Abusing Models: Lessons from COVID-19

Sourced From: legal-planet.org/2020/05/20/modeling-the-future-lessons-from-covid-19/

Published Date: Wed, 20 May 2020 14:19:50 +0000
